Did you know that your code is more likely to contain defects when it is committed before lunch?

Why do software estimates usually overrun? How do you decide whether the business would be better off delighting 100 users or making 50 users less frustrated?

Whether in a technical or managerial role, engineering, product, and business leaders all have to make countless decisions as part of their day-to-day jobs. Some of these decisions are insignificant, while others can have a major impact. Ultimately, the success of the business, and of our careers, depends heavily on the choices we make.

In theory, it’s easy to understand the importance of improving the quality of our decisions. In practice, however, every choice we make can be skewed by cognitive biases, which stem from our automatic thinking. Cognitive biases, the invisible forces that trip us up through error and prejudice, are like optical illusions: while under the illusion, we fully believe what we are seeing, and only in hindsight do we realize how our perspective was skewed.

What I if told you…

…you just read this sentence wrong? That’s right. Your brain is a machine that jumps to conclusions, and, like most people, you probably didn’t spot the grammatical mistake the first time you read the heading.

Let’s explore four of the invisible forces that influence our decisions: availability heuristic, cognitive ease, anchoring, and confirmation bias.

1. Availability heuristic: if you can think of it, it must be important

One of the most essential biases to understand is the availability heuristic: our tendency to form judgments and evaluate likelihoods based on how quickly an example comes to mind. For instance, after seeing news coverage of a plane crash, you are likely to feel, at least temporarily, that flying is less safe, even though one vivid crash says little about the actual risk.

For example, you may encounter this effect when:

- You read a success story about how the [insert new, shiny feature/framework] enabled faster, better results for a team and you alter your choices based on what you have just ‘learned’;

- You underestimate the riskiness of a roll-out and are overconfident because other recent roll-outs went fine;

- Alarms for a failing system component flood your inbox and it becomes the most ‘urgent’ thought of the day, regardless of how much impact that component’s failure actually had; or,

- You are unnecessarily defensive and over-engineer a feature because you’ve recently read a lot of post-mortems and bug reports.

Why does this happen?

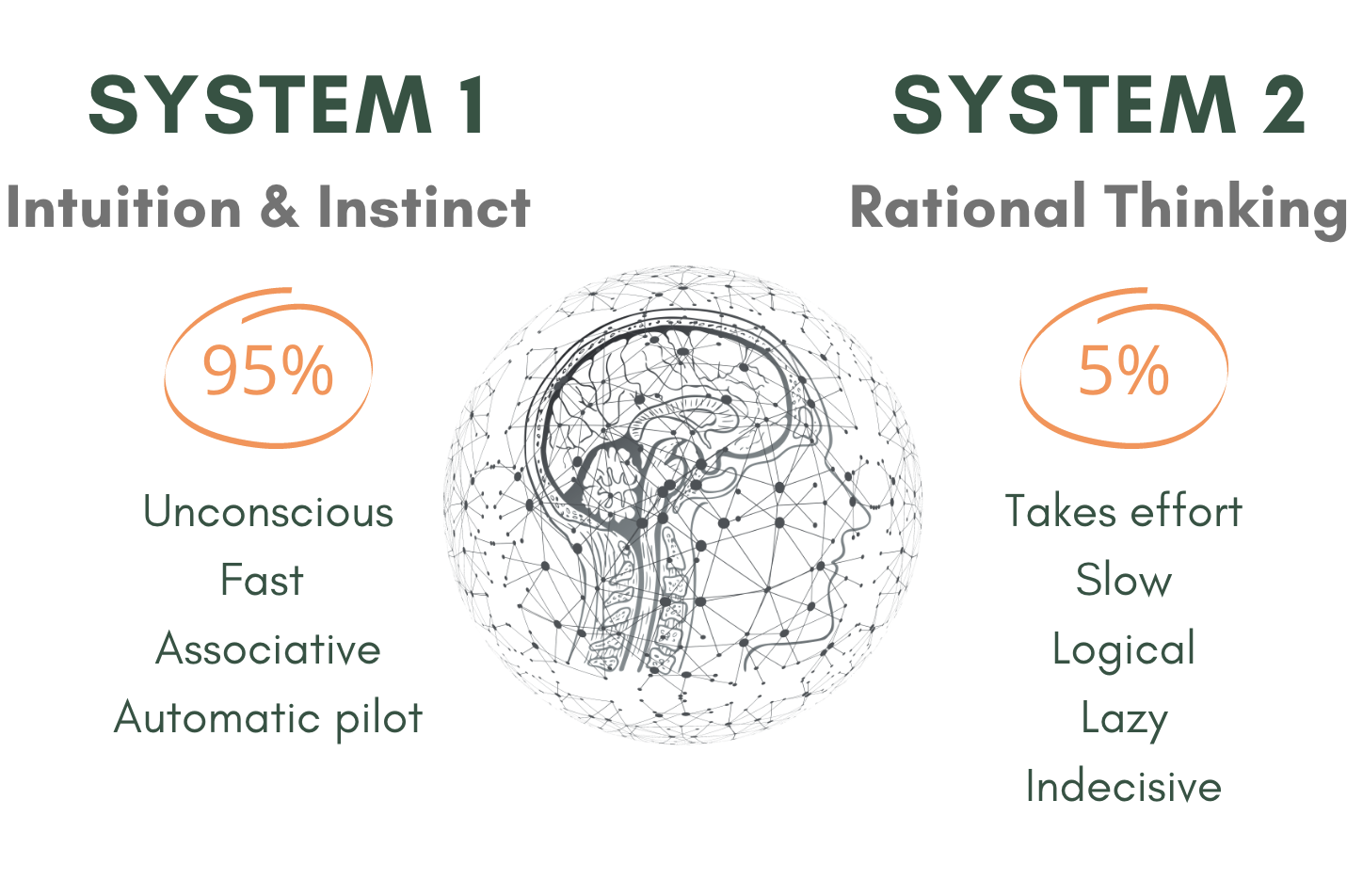

Nobel Prize-winning psychologist and economist Daniel Kahneman’s main thesis on ‘how we make decisions’ is that we think in two modes: System 1 and System 2.

System 1 is fast, automatic, and unconscious. System 2 is your deliberate, logical mode: it is slow, effortful, and demands attention and energy. Psychologists believe that we live much of our lives guided by the impressions of System 1, often without knowing where those impressions come from. Let’s illustrate this with an example.

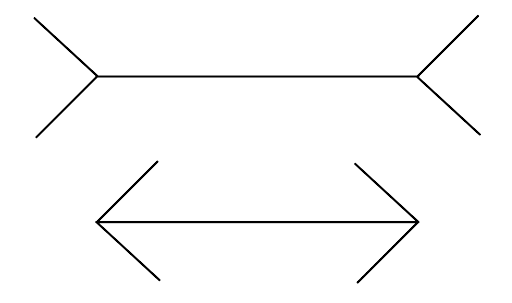

Which of the lines is longer?

We naturally see the top line as longer than the bottom one. That is what we see. If you measured the lines with a ruler, you could easily confirm that they are the same length; the only difference between them is the fins attached to each end, which point in different directions. Now that you have measured the lines, your System 2 says: ‘I have a new belief’. If someone asked you about their lengths, you would say they are equal. However, you still can’t help seeing one as longer than the other: System 1 is doing its thing. To avoid being fooled by the illusion, you must learn to distrust your initial impression of lines with fins attached and recall the measurements you performed.

Can you recall the last time you had to make a choice where one option was logically more plausible, but you kept being tempted by the other because of your initial impression of it?

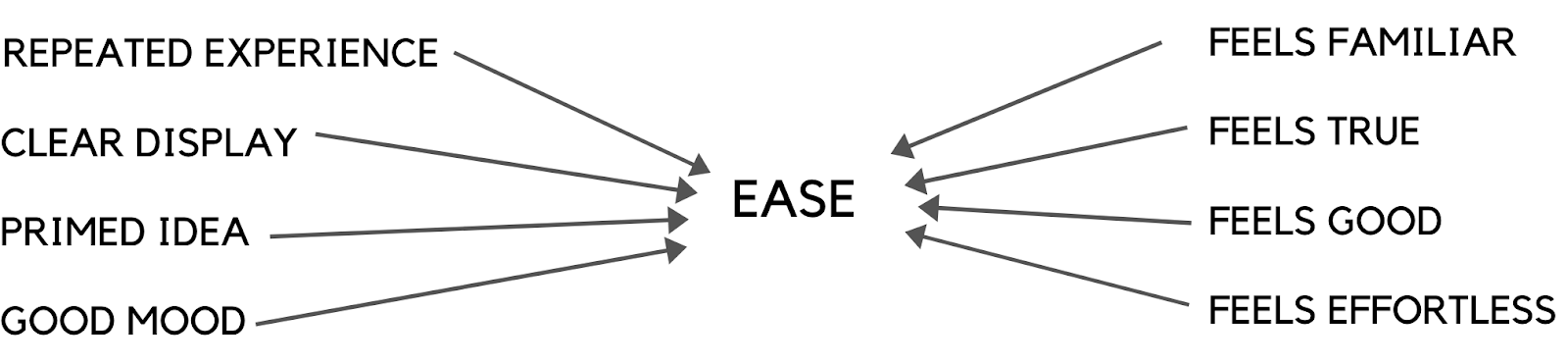

2. Cognitive ease: if it’s easier to perceive, it is the better solution

When describing the availability heuristic, I mentioned that we tend to form judgments based on how quickly examples come to mind. Generalizing this, System 1 always favors the cognitively easy path: when faced with a decision, you (and everyone else) tend to err on the side that is cognitively easier. To counter this effect, we must engage System 2 (logical thinking), which is slow and effortful. Because System 2 demands that effort, we are more prone to accepting the involuntary suggestions of System 1 when we are fatigued, hungry, or under cognitive strain.

Think of the times when you had a long day of meetings or felt tired, hungry, or stressed. You very likely made at least one choice simply because it was the cognitively easier one.

For example, you may encounter this effect when:

- You’ve been in a number of back-to-back calls, and you give up on trying to think of creative solutions and go with the defaults you’ve used in the past.

- You’re in a meeting just before lunch, you’re hungry, and you might:

  - go with the first suggested solution to avoid thinking too hard;

  - say the first thing (or act on the first thought) that comes to mind; or,

  - choose an easy, default option when faced with a decision.

On the other hand, we can also make this mechanism work in our favor:

- Bold fonts make your message more obvious.

- Clear and simple sentences are more persuasive.

- Repeating an idea helps the receiver warm up to it (think of how the same ads are shown again and again).

All of this works because the easier information is to take in, the less likely the receiver is to invoke System 2 thinking and scrutinize it.

3. Are you anchored? If you have a starting point, it’s easier to limit your thinking

Anchoring is a prime example of how the cognitive ease of a reference affects our judgments.

‘How long will this feature take to implement, two weeks?’

If you have been asked such a leading question, or shown a price tag of ‘$9.95’, you have been anchored to a reference point. Experiments have measured anchoring effects of roughly 40-55%, which means an arbitrary number can pull our estimates a long way toward it. System 1, your machine for jumping to conclusions, automatically and involuntarily favors the cognitively easy path, and the anchor is the easiest starting point available. However logical you would like to be, System 2 is unaware of this operation and is influenced by it anyway. The anchor works even when the number is inaccurate or the information behind it is poor: System 1 still uses it as the starting point for your reasoning.

Priming works similarly: exposure to one stimulus influences how we respond to later ones. While it is difficult to counter the effects of anchoring and priming, here are a few ways to use them to your advantage:

- If you need people to accept a controversial idea, don’t aim to surprise anyone with it. Instead, let them get used to it by offering glimpses beforehand and unveiling it slowly over time. The first time people are exposed to an idea, especially a controversial one, they like it least because it is least familiar: their associative machinery has no examples to draw from yet. Introduce new, controversial ideas slowly.

- Most people don’t actively choose their own reference points during a conversation. That’s why it pays to set the reference point yourself, especially if you are describing something in terms of gains vs. losses.

Speaking of priming: since the start of this article, you’ve been primed to expect ‘four invisible forces’, so you are probably expecting to read about the last one now.

4. Confirmation bias: if it confirms what you already thought, it must be true

This is our tendency to seek out or favor information that confirms our pre-existing beliefs (closely related to ‘wishful thinking’). System 1 favors cognitive ease, acts fast, and jumps to conclusions when presented with a choice. Unless you engage System 2, System 1 will automatically favor an existing belief.

- Have you found yourself digging up a single metric to prove the success (or failure) of a feature (or component)? (That is, until a teammate points out another metric that was negatively affected.)

- Have you written or reviewed tests that only check that the system works as intended, without also probing edge cases? (That is, until the tests pass and you discover a bug in production.)

- Following a user research activity, have you noticed that your inferences confirm what you initially had in mind?

If you answered ‘yes’ to any of the above, you have witnessed confirmation bias in action.

We seek out data that is likely to agree with our current beliefs. To counter this:

- Define your metrics before you look at the results.

- Test your hypotheses by trying to refute them.
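To make ‘try to refute it’ concrete for the testing example above, here is a minimal Python sketch. The `percent_change` helper and the test names are hypothetical, invented purely for illustration. One test confirms the case we already expect to work; the others deliberately go looking for inputs that could break it: a decrease, a negative baseline, and a zero baseline.

```python
import math


# Hypothetical helper, invented for illustration: relative change in percent.
def percent_change(old: float, new: float) -> float:
    if old == 0:
        # A zero baseline has no meaningful relative change.
        raise ValueError("percent change is undefined for a zero baseline")
    # Dividing by abs(old) keeps an increase positive even for negative baselines.
    return (new - old) / abs(old) * 100.0


# Confirming test: checks the case we already believe works.
def test_typical_increase() -> None:
    assert math.isclose(percent_change(50, 75), 50.0)


# Refuting tests: each one actively tries to prove the function wrong.
def test_decrease_is_negative() -> None:
    assert math.isclose(percent_change(80, 60), -25.0)


def test_negative_baseline() -> None:
    # Moving from -10 to -5 is an increase of 5 on a magnitude of 10.
    assert math.isclose(percent_change(-10, -5), 50.0)


def test_zero_baseline_is_rejected() -> None:
    try:
        percent_change(0, 10)
    except ValueError:
        return  # Expected: the function refuses an undefined input.
    raise AssertionError("expected ValueError for a zero baseline")
```

A runner such as pytest collects the `test_*` functions automatically. The confirming test alone would pass even if the function mishandled a zero baseline; only the refuting tests can catch that kind of bug before production does.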

Conclusion

Cognitive biases are not optional: we are all prone to errors of judgment, and understanding biases doesn’t mean you can bypass them. Being aware of the invisible forces that affect your choices does, however, give you an advantage in filtering out some of them, provided you stay alert to them, especially in the moments when you’re making a decision.

I have presented some of the most common biases I observe in my day-to-day work, but there is much more to the subject. Kahneman explores this field in great depth in his masterpiece, ‘Thinking, Fast and Slow’.

If there are two takeaways from all of this, they are:

- Before making a choice, ask yourself if you could be choosing that option out of cognitive ease.

- If you are influencing someone else’s decision, make it as easy as possible for the receiver to make the decision.

Which one of these biases do you notice in your daily role?