Which commonly-used metrics aren’t working and why?

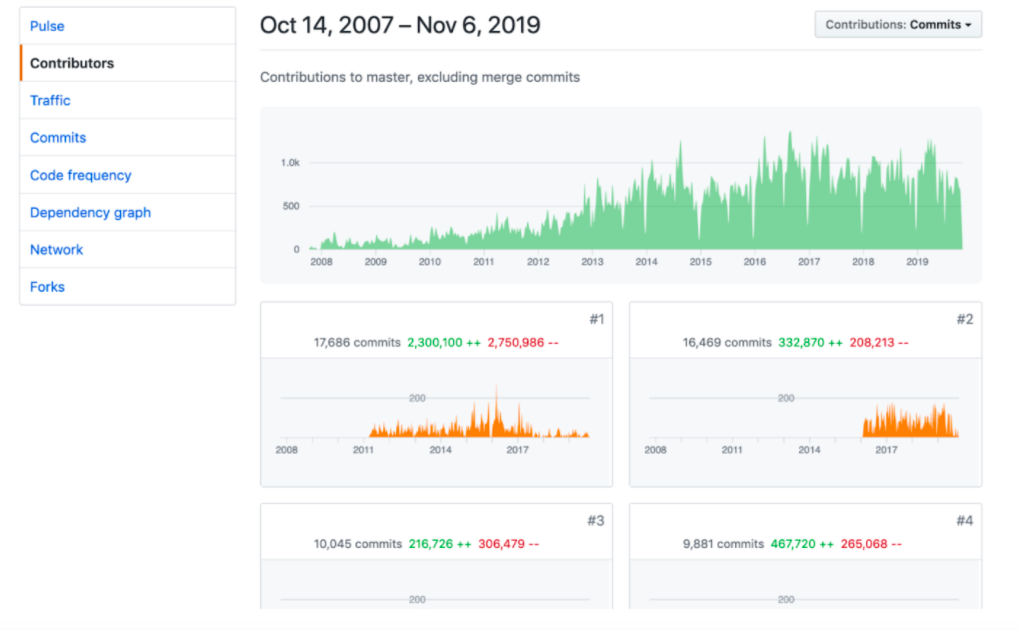

When I began researching engineering metrics several years ago, one of the first metrics I looked into were commits. While working in GitHub, they provided graphs showing commit counts so it was easy to track. I knew it wasn’t going to be the perfect metric, but it felt useful. For starters, if an engineer had no commits it would definitely be a red flag. If there are no commits, no code is getting shipped; on a software team, that’s a problem.

See how useful this metric is already? It gives you an awesome way to see how much work is getting done. And it gets even better. You see, developers that I spoke with agree that making small, frequent commits leads to better-designed code. And so if you increase your number of commits, that will, in effect, result in smaller, more frequent commits – which is great!

I thought I was onto something, so I showed it to some other CTOs and they thought it sounded kind of interesting. But then I brought it up with my dad over a family dinner – he thought it was garbage and said that no developer would ever want to be measured like this. For context, my dad had been a developer for 30 years. And throughout his career, he’d seen many situations where managers would roll out terrible metrics and anger everyone on the team. He told me that tracking commits was a horrible idea because it said nothing about the actual value of the work delivered. And if someone wanted to, they could easily game the metric by creating extra commits.

Number of commits don’t tell anything about the value and quality of those commits. Please don’t measure yourself with this poor metric.

— Jaana Dogan ヤナ ドガン (@rakyll) February 26, 2019

I’ve highlighted how commits are problematic, but it’s not the only metric that spells trouble. There are four others that I see a lot of companies using as a way to measure productivity. But these metrics are flawed. I call them, ‘the flawed five’. They are:

- Commits

- Lines of code

- Pull request count

- Velocity points

- ‘Impact’

Lines of code

The number of lines of code is a metric that has been around for decades – but it’s a really bad measure of productivity. For starters, there are different languages and formatting conventions that greatly vary in the number of lines of code they generate. So, three lines of code in one programming language might be exactly the same thing as nine lines in another.

Additionally, any good developer knows that they can code the same stuff with huge variations in lines of code, and that refactoring code (which is good) results in less code.

So not only is this metric inaccurate, but it incentivizes programming practices that are a counter to building good software.

Unfortunately, lines of code is still a really common metric used in our industry. I come across companies that use it as a way of evaluating developers’ contributions to their team, even determining stack ranking and terminations based on it. I think we’d all agree that this isn’t good practice, but it’s surprisingly common. We need to move away from this.

Number of lines of code written is not a measure of your value to a project.

— Scott Hanselman (@shanselman) April 13, 2019

Pull request count

Another metric that I see being used to measure productivity is pull request count. Counting pull requests seems to be a more recent trend – I was at a meetup last year and a manager said to me, ‘Pull request count is the new vanity metric’. And I completely agree with him. It’s not a good way of measuring how much work is getting done. Tracking the number of pull requests created or merged doesn’t factor in the size, effort, or impact of that work; it tells you almost nothing other than the number of pull requests created.

Like lines of code, this metric can encourage counterproductive behaviors. For example, this metric could encourage developers to unnecessarily split up their work into smaller pull requests, creating more work and noise for the team. I’ve seen this metric spreading like wildfire across our industry. A recent example I came across is GitLab’s engineering OKRs. These are published on their website.

In this OKR, their objective is to improve productivity by 60%. They intend to achieve and measure this by increasing the number of merge requests created per engineer by 20%.

I don’t think this is a good practice. Counting pull requests might seem less offensive than counting lines of code, but both metrics suffer from similar flaws.

Velocity points

Velocity points can be an unpleasant subject. I think a lot of developers see them as a necessary evil. Personally, I’m a big fan of velocity points and think that they can be an outstanding way of sizing and estimating work. However, when you try to turn velocity into a measurement of productivity, you will run into problems.

Jira tracks individual “productivity” using points per Sprint, thereby destroying the careers of highly productive people who happen to be working on very hard problems.

— Allen Holub (@allenholub) August 5, 2019

When you reward people or teams based on the number of points they complete, they are incentivized to inflate their estimates in order to increase their number. When this happens, it makes the estimates and the number of points you are completing, meaningless. In essence, as you start using points to measure productivity, points become useless for their designed purpose.

Impact

‘Impact’ is a new, proprietary metric offered by several prominent vendors in the engineering analytics space.

‘Impact’ is an evolved version of lines of code. It factors in things like how many different files were changes, and what number of changes were new code vs. changing existing code. All these factors are combined to calculate what is called an ‘Impact’ score for each developer or team.

I’ve observed many companies that have tried this metric, and developers almost always hate it. Not only does this metric suffer from the same flaws as lines of code, but it’s really difficult to understand because it’s calculated using a number of factors.

Then there’s the naming of it. Calling a metric ‘Impact’ sends a strong signal about how it should be used, particularly by managers. And this makes it very easy to misuse. Do you remember the story about my dad? This is exactly the kind of stuff he was terrified about.

Conclusion

Identifying metrics for developer productivity is difficult. There are many things we can measure in software, but few that we should. But why is it that these five metrics are still so prevalent? Why is it that we keep using them despite their flaws? What is the definition of developer productivity? And what metrics can we use? It’s time to work together to answer these questions.