Many of the old walls have come down between developers, operations, and security professionals, but there are still some steps you can take to build a truly effective culture.

The modern world quietly relies on millions of people developing and operating complex technical systems every day. Now, keeping those systems secure has become a board- or even government-level issue.

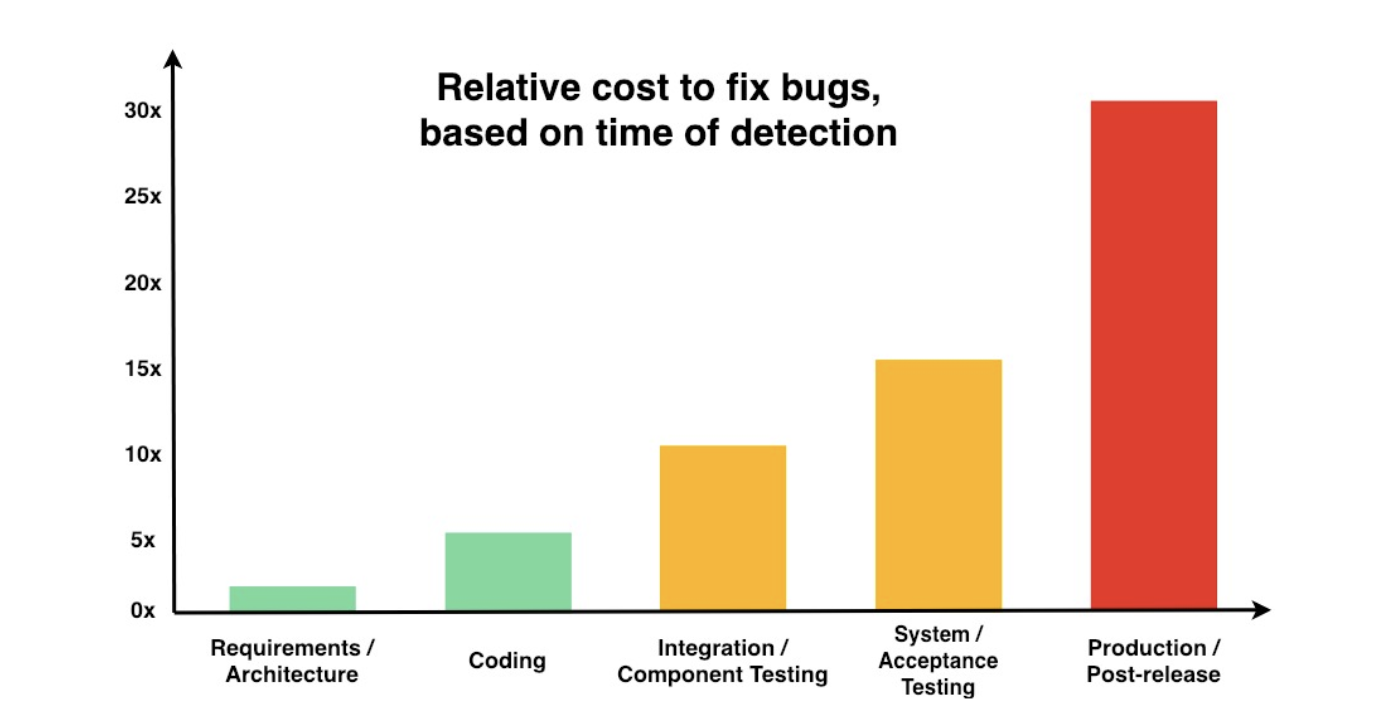

The difference between fixing a security vulnerability in minutes or weeks can have a marked impact on the brand reputation, market share, customer and investor relations, and even profit and stock price of your organization. Vulnerabilities fixed earlier in the development process are also easier and cheaper to fix, according to NIST (see the graphic below).

Cultural orientation

Speeding up fixes often requires a shift in organizational culture. There is ample evidence that people are more productive and make fewer errors when working in a culture that combines tough challenges with active support, such as coaching, communication, and collaboration.

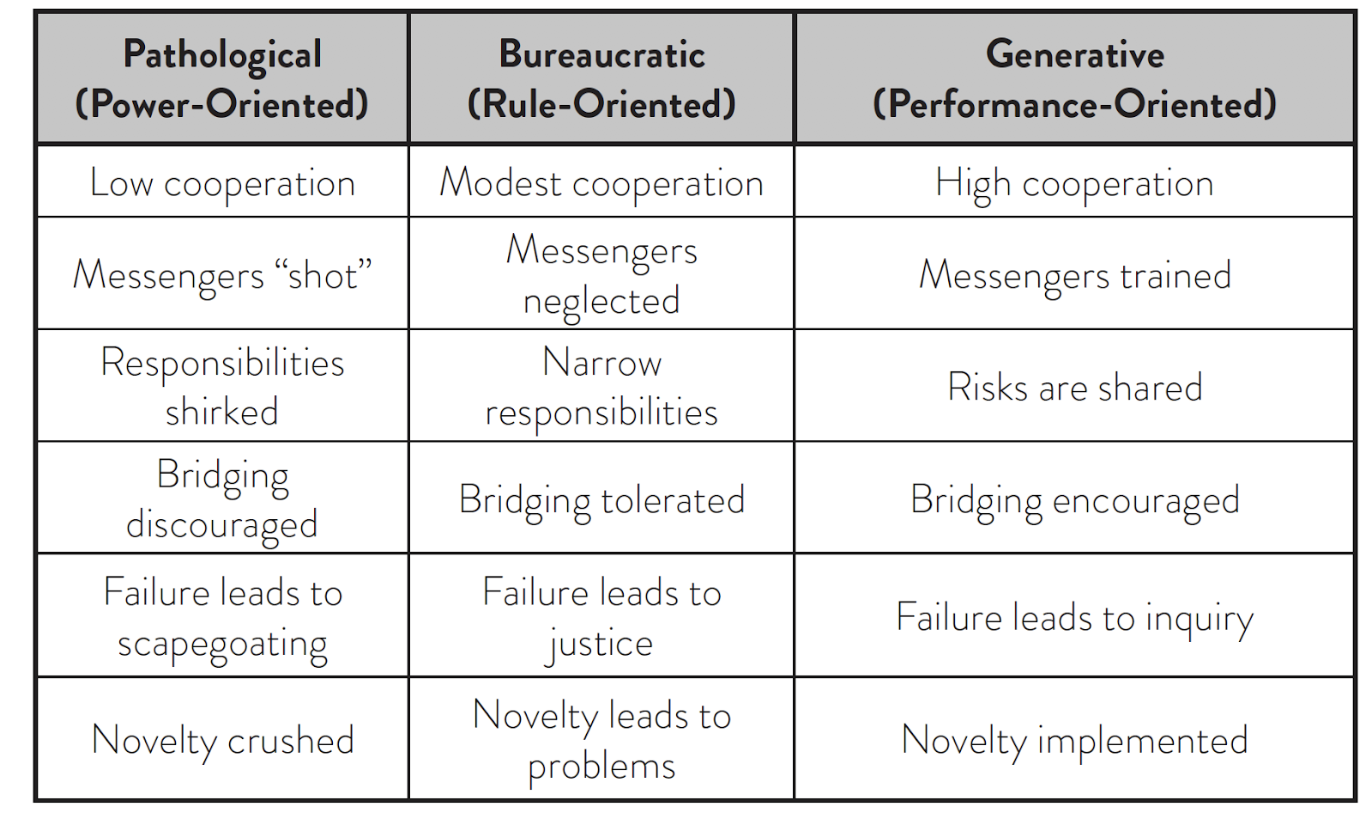

Research conducted by sociologist Ron Westrum in 2005 identified three common types of cultural orientation at IT organizations: those based on power (pathological), rules (bureaucratic), or performance (generative). The key attributes of each orientation are shown in the table below:

It would be unusual for an organization to have the same culture at every level. For example, a division where messengers are ‘shot’ and novelty is ‘crushed’ (pathological) may also have pockets of innovation with high cooperation and shared risks (generative).

My first suggestion is to reflect on the default cultural orientation at your organization, and on the extent to which culture differs across its divisions and teams.

When things go wrong

A great place to start your quest is to focus on what happens when a security vulnerability is identified. Are fingers pointed (pathological), do heads roll (bureaucratic) or are failures seen as an opportunity to improve the system (generative)?

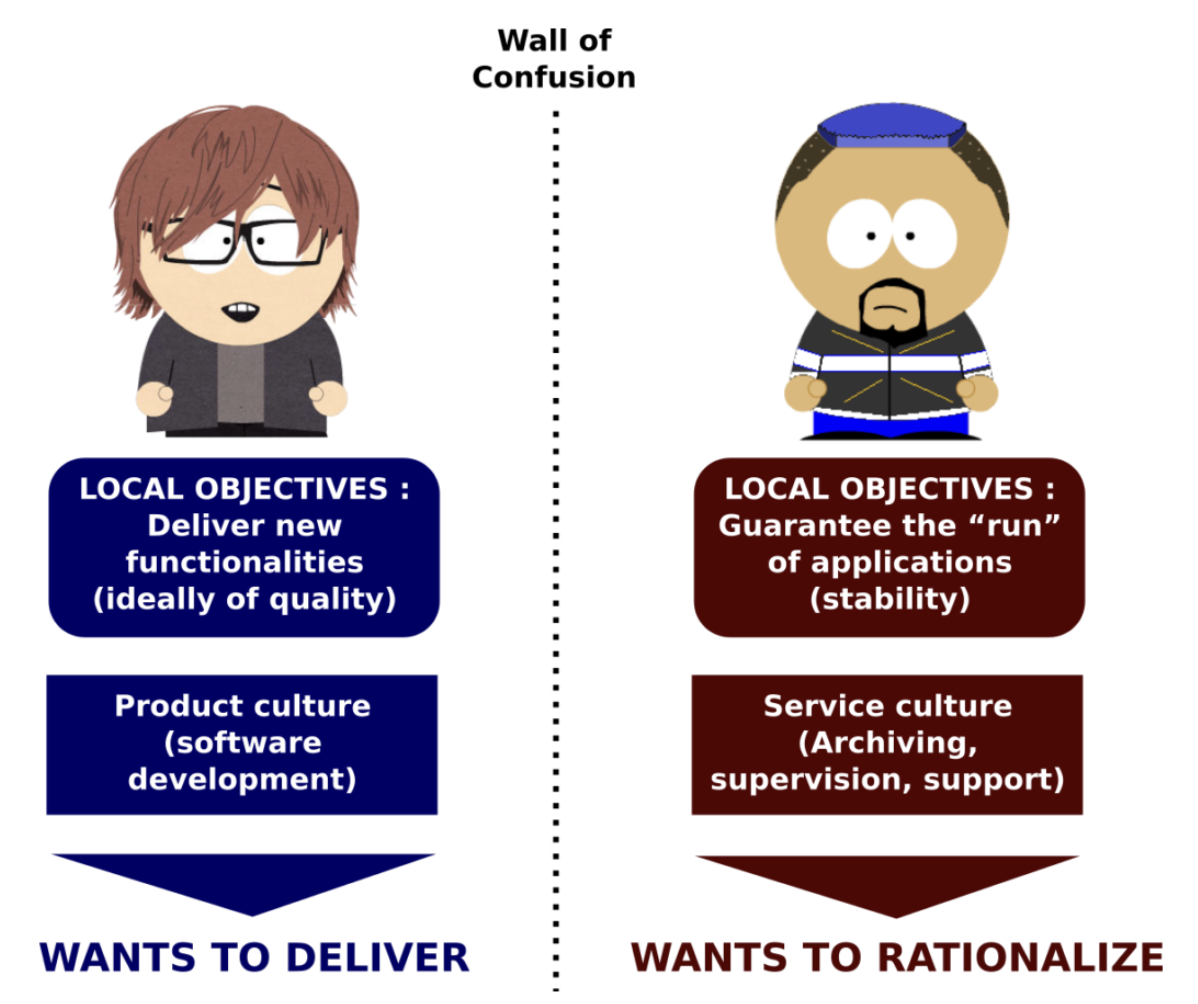

Context matters here. Historically, there was considerable tension, verging at times on friction, between developers and operations, sometimes known as the ‘Wall of Confusion’ (see below). Why? At the risk of oversimplification, developers are incentivized to release features fast, often working in sprints, whereas operations want stability in production environments.

Historically, security teams maintained extensive lists of known vulnerabilities, would fail any build or halt any deployment perceived to be vulnerable, and then require developers to fix those perceived vulnerabilities.

This often forced developers to context switch away from a project with a tight deadline to fix “vulnerabilities” that on inspection proved to be false positives. The outcome was that many developers faced not one but two walls of confusion: one with operations and the other with security.

Security incidents will happen, but try to avoid finger pointing and head rolling, in favor of conducting an AAR (After Action Review) after each incident to gain a better understanding of the ‘what, how and why’.

Invented by the US Military, AARs are very useful in defusing tensions, as they focus on improving systems and processes. Typical AAR questions include:

- What were the key milestones of the incident?

- What was the response from those involved when the incident happened?

- How could the incident have been prevented?

- How could the remediation process be improved?

For example, if an AAR were to identify that developers do not fully understand their security scan results, management could consider investing in suitable training to reduce the likelihood of vulnerabilities being overlooked in production.

Conducting an AAR after each incident is vital but no substitute for shifting to a generative culture that values trust, cooperation, innovation, and excellence.

My second suggestion is to reflect on how security and developers can best work together to create and sustain a more collaborative environment.

Management theory

Everyone has their own values, beliefs, assumptions, and mental models. Organizations are complex dynamic adaptive systems, so ensuring that there is mutual dependency between the organization and its people is paramount:

- Organizational culture (values, norms, assumptions, leadership style and so on) is heavily influenced by the behaviors of the individuals at the organization, and the level of development at which most of them are anchored.

- Culture in turn influences the design of systems and structures, and impacts individual and group performance, and commercial success.

- When employees interact, they influence each other, resulting in new forms of culture and innovation.

Shifting organizational cultures requires leaders to balance three people-related aspects (‘Individuals’, ‘Culture’, and ‘Relationships’) and three process-related aspects (‘Strategy’, ‘Systems’, and ‘Resources’).

Some leaders rely on detailed manuals; others on empathy and established behaviors – “this is the way we do things round here.” Irrespective of approach, all leaders need to:

- Be accessible and responsive to the needs of others.

- Acknowledge and value everyone’s contribution.

- Create opportunities for their people.

- Take the time to build trusting relationships.

- Be strong, have vision, and be comfortable with a hands-off approach.

Practical issues

Ideally, security wants to be a developer’s friend and help embed best practice.

To use a car analogy, security are the brakes that allow corners to be approached at speed, as well as the seat belts in the event of an incident. Focus on your positioning to developers and work on answers to these ten practical questions with your team:

- Have you automated security scanning in your pipelines?

- Have you provided bite-sized vulnerability explanations?

- Do you have smart notifications and an empowered incident response team?

- Have you put in place processes that minimize manually handled incidents?

- Have you specified what should happen when a vulnerability is first identified?

- Can you improve your metrics beyond the number of manually handled incidents over time and the mean time to respond/fix?

- Are your vulnerability definitions easily understandable in multiple learning formats?

- Do you organize developer training to enhance secure coding skills?

- Are you making life easy and fun for developers through help buttons, prizes, hackathons, and so on?

- Are your security dashboards meaningful?
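
As a starting point for the metrics question above, here is a minimal Python sketch of the two baseline measures the list mentions: mean time to fix and the share of incidents handled manually. The `Incident` record and its field names are hypothetical and chosen only for illustration; a real team would pull these values from its own ticketing or scanning tools.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Incident:
    """Hypothetical incident record; field names are illustrative assumptions."""
    detected_at: datetime
    fixed_at: Optional[datetime]  # None while the incident is still open
    handled_manually: bool


def mean_time_to_fix(incidents: List[Incident]) -> Optional[timedelta]:
    """Average detection-to-fix duration, counting closed incidents only."""
    durations = [
        i.fixed_at - i.detected_at for i in incidents if i.fixed_at is not None
    ]
    if not durations:
        return None  # nothing has been fixed yet, so there is no mean to report
    return sum(durations, timedelta()) / len(durations)


def manual_share(incidents: List[Incident]) -> float:
    """Fraction of all incidents that needed manual handling."""
    if not incidents:
        return 0.0
    return sum(1 for i in incidents if i.handled_manually) / len(incidents)


if __name__ == "__main__":
    sample = [
        Incident(datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 11, 0), True),
        Incident(datetime(2024, 1, 2, 9, 0), datetime(2024, 1, 2, 13, 0), False),
        Incident(datetime(2024, 1, 3, 9, 0), None, True),  # still open
    ]
    print("Mean time to fix:", mean_time_to_fix(sample))
    print("Manually handled share:", round(manual_share(sample), 2))
```

Tracking both numbers over time, rather than as one-off snapshots, is what would show whether automation and training are actually reducing manual effort.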

Future trends

Just because something is safe today doesn’t mean it will be safe tomorrow. The implications of quantum computing may be beyond the scope of this article, but upcoming AI developments in DevSecOps could include:

- Using AI to ‘Explain this Vulnerability’ to provide comprehensible explanations and potential solutions to vulnerabilities.

- Equipping security incident response teams with AI capabilities to analyze data from logs, alerts, and other sources and detect anomalous behavior or potential security threats.

- Automated Security Testing and analysis, leading to faster and more accurate detection and remediation of vulnerabilities.

The term ‘generative’ is being hijacked by AI, so it’s important to use it with care. Where a generative culture generates more productive people and more effective processes, generative AI can generate a false sense of security due to its propensity to include misleading but plausible data. Proceed with caution.

Explore the entire deep dive: ‘Helping your DevOps teams meet rising user expectations’