Discover the essential measurements that can inform your ongoing journey of continuous improvement.

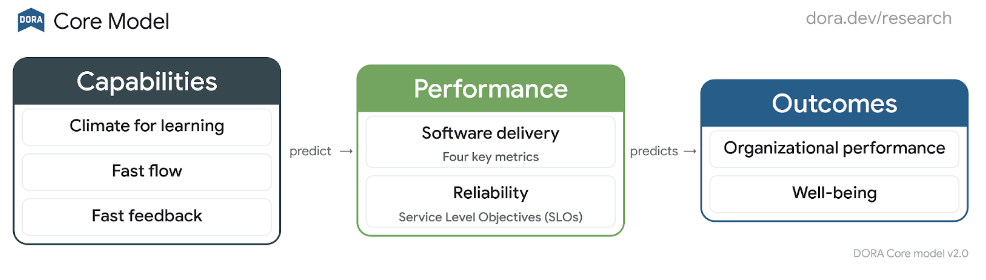

Technology-driven teams track their performance to understand where they are today, what to prioritize for improvement, and how to validate progress. DORA's four software delivery metrics, known as the four keys, measure the outcomes of a team's software delivery. DORA's research links strong software delivery performance to better organizational performance and happier team members.

But are DORA metrics right for your team? And how can you avoid common pitfalls when using them?

Throughput and stability

DORA's four keys can be divided into metrics that show the throughput and stability of software changes. Here, a change means an alteration of any kind, including changes to configuration as well as to code.

Throughput

Throughput measures the velocity of changes. DORA assesses throughput using the following metrics:

- Change lead time: This metric measures the time it takes for a code commit or change to be successfully deployed to production. It reflects the efficiency of your delivery pipeline.

- Deployment frequency: This metric measures how often application changes are deployed to production. Higher deployment frequency indicates a more agile and responsive delivery process.
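To make the two throughput definitions concrete, here is a minimal Python sketch that computes them from deployment records. The `Change` record and its field names are hypothetical. Summarizing lead time with the median and expressing deployment frequency as deployments per day over a fixed window are assumptions made for illustration, not DORA's prescribed calculation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Change:
    """Hypothetical record of one change that reached production."""
    committed_at: datetime  # when the change was committed
    deployed_at: datetime   # when it was successfully deployed to production


def change_lead_time(changes: list[Change]) -> timedelta:
    """Median commit-to-production time (the median is an assumed summary statistic)."""
    durations = [c.deployed_at - c.committed_at for c in changes]
    return median(durations)


def deployment_frequency(deployed_at: list[datetime], window_days: int) -> float:
    """Average number of production deployments per day over a fixed window."""
    return len(deployed_at) / window_days


# Illustrative data: three changes committed at the same moment.
t0 = datetime(2024, 1, 1, 9, 0)
changes = [
    Change(t0, t0 + timedelta(hours=2)),
    Change(t0, t0 + timedelta(hours=4)),
    Change(t0, t0 + timedelta(hours=30)),
]
print(change_lead_time(changes))  # 4:00:00
print(deployment_frequency([c.deployed_at for c in changes], window_days=7))
```

The median is used here because it is less sensitive than the mean to a single change that sat unreleased for a long time.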

Stability

Stability measures the quality of the changes delivered and the team’s ability to repair failures. DORA assesses stability using the following metrics:

- Change fail percentage: This metric measures the percentage of deployments that cause failures in production, requiring hotfixes or rollbacks. A lower change fail percentage indicates a more reliable delivery process.

- Failed deployment recovery time: This metric measures the time it takes to recover from a failed deployment. A lower recovery time indicates a more resilient and responsive system.
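The stability metrics can be sketched the same way. This Python example assumes a hypothetical `Deployment` record that flags whether a deployment needed a hotfix or rollback and, if so, when service was restored. Using the median for recovery time is again an illustrative choice.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    deployed_at: datetime
    failed: bool                             # True if it needed a hotfix or rollback
    recovered_at: Optional[datetime] = None  # when service was restored, if it failed


def change_fail_percentage(deploys: list[Deployment]) -> float:
    """Percentage of deployments that caused a failure in production."""
    if not deploys:
        return 0.0
    failures = sum(1 for d in deploys if d.failed)
    return 100.0 * failures / len(deploys)


def failed_deployment_recovery_time(deploys: list[Deployment]) -> Optional[timedelta]:
    """Median time from a failed deployment to recovery; None if nothing failed."""
    durations = [
        d.recovered_at - d.deployed_at
        for d in deploys
        if d.failed and d.recovered_at is not None
    ]
    return median(durations) if durations else None


# Illustrative data: one failure out of four deployments, recovered in 45 minutes.
t0 = datetime(2024, 1, 1)
deploys = [
    Deployment(t0, failed=False),
    Deployment(t0 + timedelta(days=1), failed=True,
               recovered_at=t0 + timedelta(days=1, minutes=45)),
    Deployment(t0 + timedelta(days=2), failed=False),
    Deployment(t0 + timedelta(days=3), failed=False),
]
print(change_fail_percentage(deploys))           # 25.0
print(failed_deployment_recovery_time(deploys))  # 0:45:00
```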


Key insights

DORA's research program has been running for over a decade and has gathered extensive data on how these four keys interact with one another.

Throughput and stability have long been assumed to be in opposition: to be stable, the thinking goes, teams must move slowly. DORA's research invalidates this assumption. Its data shows top performers excelling across all four keys while low performers struggle.

These four keys can be used to measure mainframe applications, web applications, mobile applications, and even updates made to off-the-shelf software. Of course, teams should expect to use different methods and capabilities when building and delivering different technology stacks. Context matters, but these metrics are broadly applicable.

When DORA is the right fit (and when it’s not)

DORA metrics provide valuable insights for teams focused on continuous improvement, helping them identify areas for progress and track those areas over time. This culture of continuous improvement may not suit every team, so consider carefully whether it is the right path for you.

Additionally, if releasing software is currently a painful, slow, or error-prone process for your team, DORA can help streamline and improve these workflows.

However, DORA metrics might not be the best fit for your team in certain situations. If your primary focus is cost reduction, DORA may not align with your goals, as it emphasizes value-driven approaches that prioritize speed and stability over cost-cutting measures.

Lastly, if delivering software changes is already easy, fast, and reliable for your team, tracking DORA metrics might be less beneficial. While these metrics can help ensure you stay on track, other areas could be more worthy of your attention. For example, you might explore opportunities to improve the reliability of your running application or enhance cross-functional collaboration within your organization.

The common pitfalls of applying DORA

There are some pitfalls to watch out for as your team adopts DORA’s software delivery metrics, including the following:

Setting metrics as a goal

One key mistake is turning metrics into goals. Goodhart's law warns that when a measure becomes a target, it ceases to be a good measure. Ignoring it by making broad declarations such as, "Every application must deploy multiple times per day by year's end," can lead teams to manipulate the metrics instead of genuinely improving performance.

Having one metric to rule them all

We all love simplicity, but attempting to measure a complex system with a single metric is another pitfall to watch out for. Teams should identify multiple metrics, including some that may appear to trade off against one another, such as cost and quality. DORA's software delivery metrics are a good start, but teams may benefit from additional metrics focused on the capabilities they're improving. The SPACE framework can guide you in discovering a suitable set of metrics.

Using industry as a shield against improving

Teams that work in highly regulated industries may claim that compliance requirements prevent them from moving faster. Avoid the pitfall of using industry or policy requirements as justification for why it is impossible to disrupt the status quo. DORA’s research has found that industry is not a predictor of software delivery performance; high-performing teams are seen in every industry.

Making disparate comparisons

These software delivery performance metrics are meant to be used at the application or service level. Each application has its own unique context where technology stacks, user expectations, and teams involved may vary greatly. Comparing metrics between vastly different applications can be misleading and may instill a sense of hopelessness in underperforming teams.
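In practice, this means grouping deployment records by application or service before computing anything. The Python sketch below uses hypothetical `(service, failed)` tuples and made-up service names to report a change fail percentage per service instead of one organization-wide number.

```python
from collections import defaultdict


def fail_percentage_by_service(deploys: list[tuple[str, bool]]) -> dict[str, float]:
    """Change fail percentage computed separately for each (hypothetical) service."""
    totals: dict[str, int] = defaultdict(int)
    failures: dict[str, int] = defaultdict(int)
    for service, failed in deploys:
        totals[service] += 1
        failures[service] += int(failed)
    return {service: 100.0 * failures[service] / totals[service] for service in totals}


# Illustrative records for two very different services.
deploys = [
    ("checkout-web", False),
    ("checkout-web", False),
    ("checkout-web", True),
    ("checkout-web", False),
    ("billing-mainframe", False),
    ("billing-mainframe", True),
]
print(fail_percentage_by_service(deploys))
# {'checkout-web': 25.0, 'billing-mainframe': 50.0}
```

Each result is then read against that service's own history and context, not against the other service's number.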

Having siloed ownership

Many organizations arrange teams based on their specialty. One team may be responsible for building an application, another for releasing that application to users, and another for the application’s operational performance. Distributing ownership for the four keys across these teams can create tension and finger-pointing within an organization. Instead, these teams should all share accountability for the metrics as a way to foster collaboration and shared ownership of the delivery process.

Competing

Healthy competition between teams can be motivating, but it’s easy to take this competition too far. The goal is to improve teams’ performance over time. Use the metrics as a guide for identifying areas for growth. Celebrate the teams that have improved the most instead of those with the highest total DORA scores.
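One way to celebrate improvement rather than absolute scores is to compare each team against its own baseline. This Python sketch ranks hypothetical teams by the relative reduction in their change lead time. The team names, the numbers, and the choice of lead time as the yardstick are illustrative assumptions.

```python
def relative_improvement(baseline: float, current: float) -> float:
    """Fractional reduction versus the team's own baseline (positive means faster)."""
    return (baseline - current) / baseline


# Hypothetical (baseline, current) median change lead times, in hours.
lead_times = {
    "team-a": (2.0, 1.8),      # already fast, modest gain
    "team-b": (240.0, 120.0),  # slower overall, but halved its lead time
}

ranked = sorted(
    lead_times,
    key=lambda team: relative_improvement(*lead_times[team]),
    reverse=True,
)
print(ranked)  # ['team-b', 'team-a']
```

Ranked by absolute lead time, team-a would always come first; ranked by improvement, team-b's progress becomes visible.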

Focusing on measurement at the expense of improvement

The data your team needs to collect for the four keys is available in a number of different places. Building integrations to multiple systems to get precise data about your software delivery performance might not be worth the initial investment. Instead, it might be better to start with having conversations, taking the DORA Quick Check (which helps assess how your team is performing today), and using a source-available or commercial product that comes with pre-built integrations.

Case studies

Liberty Mutual Insurance

At Liberty Mutual Insurance, an American property and casualty insurer, a cross-functional team of software engineers regularly reviewed their performance using DORA metrics. Jenna Dailey, a senior scrum master, shared how the team used DORA research to:

- Identify a bottleneck hindering their delivery performance.

- Transition to a test-driven development approach, leading to a significant reduction in change fail percentage.

- Achieve tangible improvements in their overall performance.

Priceline

Priceline.com is an online travel agency that finds discount rates for travel-related purchases. By providing a framework for understanding and improving software delivery and operational performance, DORA helped Priceline achieve a 30% reduction in the time needed to perform changes with regional failover. By implementing DORA capabilities such as unified, reusable CI/CD pipelines, Priceline became able to deploy applications to production multiple times a day when necessary, with the added protection of built-in rollback capabilities. This increased stability led to at least 70% more frequent deployments. The automation also provides faster feedback on changes, allowing Priceline engineers to discover and mitigate issues earlier in the software development lifecycle and reducing the time spent investigating possible production incidents by about 90%.

Next steps for engineering leaders

Once you've decided whether DORA metrics are the right fit for your team, think about how you can use them to drive change in your organization, and then use these steps to guide your team:

- Set a baseline for your application using the DORA Quick Check.

- Discuss friction points in your delivery process. Consider mapping the process visually to aid in the discussion.

- Commit as a team to improving the most significant bottleneck.

- Create a plan, potentially including additional measures such as code review duration and test quality to act as leading indicators for the four keys.

- Do the work. Making change requires effort and dedication.

- Track progress using the DORA Quick Check, team conversations, and retrospectives.

- Repeat the process to drive continuous learning and improvement.

By understanding and applying DORA metrics, you can gain valuable insights into your software delivery performance and drive continuous improvement. Remember, the goal is to deliver better software faster, and DORA metrics provide the compass to get you there.